Which AI Avatar Software Offers the Best Facial Expression Controls in 2025?

AI avatars are becoming more and more realistic, so which AI avatar software offers the most advanced facial expression controls in 2025? Let's compare the leading tools.

AI avatar software with advanced facial expression controls is relatively new, but the technology has come a long way in a short time.

A few years ago, it was enough for a digital face to move its lips in sync with the words and look vaguely realistic. Now, audiences want hyper-realistic, emotive avatars that are indistinguishable from human actors. They want to see that quick flicker of recognition, a fleeting smirk, the faint facial crease when someone is thinking…

Whether you’re producing YouTube explainers, brand videos, or online courses, how well your avatar conveys emotion can directly affect how your audience connects with your content and whether they stay engaged.

In this article, we’ll look at the best AI avatar software with advanced facial expression controls, comparing the most popular tools of 2025.

AI avatar software with advanced facial expression controls is everywhere right now, but it wasn't always this way. Back when avatars were simpler, matching audio to mouth movement was an achievement, and true expressiveness was something creators could only dream of.

Today's viewers, however, have become extremely good at spotting fakes, especially on personality-driven platforms like TikTok and YouTube Shorts. They might not be able to articulate what's wrong, but they can feel it when something doesn't look human.

This new level of expectation has pushed developers beyond surface-level animation. They now use advanced emotion mapping, micro-gesture tracking and real-time facial mirroring to create hyper-realistic AI personas that look just like real people. Enter: AI avatar software with advanced facial expression controls.

Done well, these avatars can recreate the tiny muscle shifts that define emotion, such as a raised eyebrow of surprise, the narrowing of eyes in thought or the softening around the mouth when someone shows empathy.

These details are no longer just a “nice to have.” Data shows that expressive avatars can actually drive long-term engagement online. Yet, one challenge still looms large: the uncanny valley effect. This is where an avatar looks almost real but not quite (maybe the smile lingers too long or the eyes don’t quite follow the tone). This can break trust instantly and undermine your credibility as a creator, brand leader or entrepreneur.

So how do you get around this when using AI avatar software with advanced facial expression controls?

When it comes to AI avatar software with advanced facial expression controls, there are several standout tools on the market. So how do they stack up in terms of avatar realism and expressiveness?

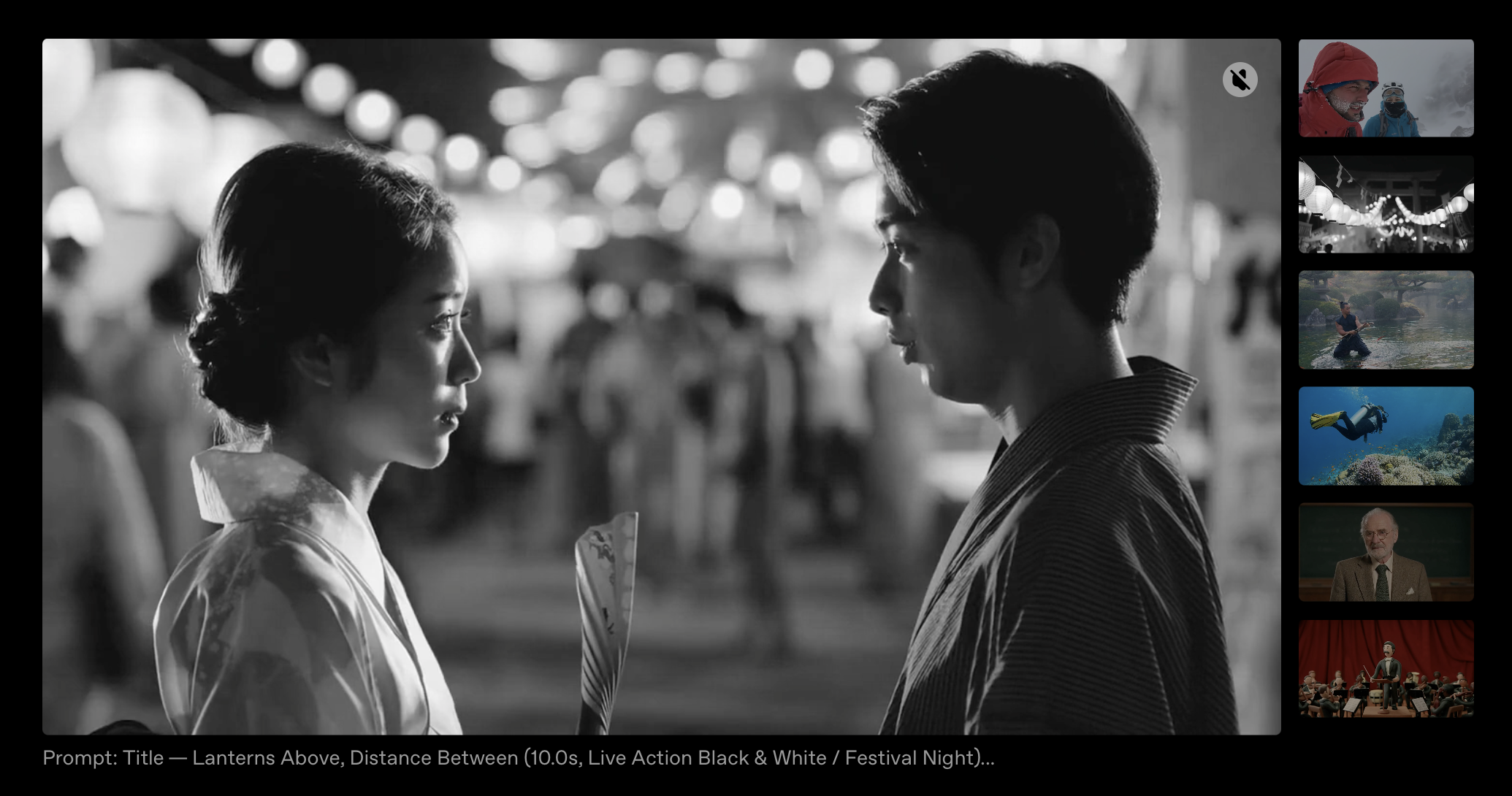

Sora is the go-to example of AI avatar software with advanced facial expression controls. Its avatars are beautifully rendered, with lighting and detail that often look like real cinematography. However, when those avatars start to move and talk, the cracks begin to show.

Subtle shifts in emotion don’t always land, and transitions between expressions can feel abrupt and unnatural. Sora generally shines in scripted scenes where emotions stay consistent, but it’s less convincing in natural, flowing conversations.

Next up is Nano Banana. From the neck down, its avatars are impressively fluid, with believable gestures, posture and pacing. Their faces, however, tell a different story, mainly because expressiveness relies on preset templates that make reactions feel a little too rehearsed.

The result is an avatar that moves like a person but doesn’t quite feel like one. This tool is great for stylized animation and storytelling, but is less suited to content that relies on authenticity and emotional nuance.

Google DeepMind's video generation model, Veo 3, tried a fresh idea: emotion tagging through text. With this tool, you can literally describe how you want a line delivered (such as serious, hopeful or amused), and the system will try to interpret it.

As far as AI avatar software with advanced facial expression controls goes, Veo is an innovative step in the right direction, but the execution isn't quite there. Veo struggles with context, taking emotional tags at face value instead of reading them in relation to the rest of the script. When the tone shifts mid-sentence, the avatar often can't keep up: a line meant to sound playful, then sincere, can come across as flat and mechanical.

Despite huge advances in AI avatar software with advanced facial expression controls, most tools still stumble when emotions overlap.

Bad timing can also betray the illusion of reality. If an avatar’s mouth moves a fraction ahead of the words, or if the face reacts just a beat too late, the human brain catches it instantly. We’re hardwired to read faces, and even the smallest delay signals “fake.”

Then there’s the issue of interpretation, as AI still struggles with subtext and tone. A simple line like “That’s just perfect” could be genuine, sarcastic or resigned. Most AI avatar software with advanced facial expression controls has no way of knowing which meaning you intend unless you explicitly state it, and even then it’s hard for AI tools to present nuanced emotion.

Because of these small but noticeable errors, many AI avatars still feel slightly hollow, no matter how technically advanced they are. So what's the solution?

Argil approaches AI avatar software with advanced facial expression controls differently. Instead of switching between single, predefined emotions, our system blends them dynamically, letting avatars express multiple feelings at once.

Under the hood, Argil maps over 300 micro-facial points, tracking even the smallest muscular changes. This means you’ll see genuine-looking eye crinkles during smiles, natural jaw tension during deep focus and the tiny, almost imperceptible shifts that make a performance feel alive.

Our platform is designed to keep everything in sync, aligning speech, posture and expression automatically. If a line becomes more intense or a sentence softens halfway through, our avatars adjust fluidly, not mechanically.

Argil also allows you to analyse the tone of a script and fine-tune expression intensity automatically. With our state-of-the-art AI avatar software with advanced facial expression controls, you can preview and adjust expressions in real time without having to re-render entire scenes.

Sora, Nano Banana and Veo 3 have all pushed the AI avatar field forward in important ways, but when it comes to AI avatar software with advanced facial expression controls, Argil currently feels closest to bridging the emotional gap. Our nuanced motion, adaptive intelligence and expressive range bring avatars within touching distance of human realism.

So why does this matter? Emotional expression has become one of the most powerful drivers of engagement in digital media. For creators who want connections to feel genuine, Argil’s AI avatar software with advanced facial expression controls shows what the next generation of AI avatars can do: not just simulate emotion, but share it.

Sign up today to create your realistic AI avatar and start generating truly emotive content!