What Can We Expect from Sora 2 After Its Moderation Issues?

Sora 2 is highly anticipated, but will it address real-world limitations such as the “Sora 1 moderation issue”?

We’ve just built the most complete FREE resource to leverage AI avatars in your business. We’ve centralized 50 use cases across 4 categories (Personal Branding, Marketing and Sales, Internal and Enterprise, and Educational and side-hustles). You can access it here NOW. Enjoy :)

If you've been following AI video generation, you've probably heard the buzz around Sora 2.

OpenAI's text-to-video tool first emerged in 2024 with professional-looking demos that really raised the bar for AI video generation, but there were (and still are) significant problems with the first model. The latest iteration launched in late September 2025 and is currently in a phased rollout, available by invitation only to certain users in the US and Canada.

Last year, early Sora users ran into frustrating moderation blocks that killed creative momentum and restricted access to video generation tools for seemingly no reason. Now everyone's wondering – can Sora 2 solve the Sora 1 moderation issues and live up to its hype? Let’s dive in.

Sora 2 is the newest iteration of Sora – OpenAI's attempt to turn text prompts into videos using artificial intelligence.

OpenAI built Sora as a diffusion model (more precisely, a diffusion transformer) and trained it on massive datasets of videos and images until it learned how motion, lighting and continuity work. The result is that you can type something like "a cat wearing sunglasses riding a skateboard through Tokyo at sunset" and get a cinematic-style video that looks scarily realistic.

What made Sora 1 stand out was its ability to maintain visual coherence across longer sequences. With earlier AI video tools, objects would morph, lighting would shift randomly and longer videos looked janky and unprofessional.

That consistency was one of Sora’s key strengths, opening up possibilities for genuine, cohesive storytelling, which we know is important for digital creators. Hopefully Sora 2 will improve on it further, but that remains to be seen!

So before we get into Sora 2, let’s look at what the first model got right, and where it went wrong.

Sora 1 genuinely impressed a lot of people when it dropped. The visual quality was excellent, lighting stayed consistent across cuts, camera movements felt natural and multi-object interactions actually worked. If you told Sora to show a dog jumping onto a couch, the dog moved like a real dog, with no robotic or unnatural motion.

The language understanding was also surprisingly good. You could feed Sora nuanced prompts with specific details and it would paint an accurate picture.

"A woman in a red coat walking through falling snow at dusk" would generate exactly that, with the right mood and atmosphere.

For a first-generation model, it was a massive leap forward. AI researchers were genuinely excited about how it bridged the gap between static image generation and full video synthesis.

But then you actually tried to use it, and the problems became obvious fast.

Even if you had access to Sora 1, you'd constantly run into moderation blocks that made no sense. This is what people came to call the “Sora 1 moderation issue.”

Clearly, moderation is necessary in case a user tries to generate something problematic or illegal. But with the Sora 1 moderation issue, videos were getting blocked for completely harmless prompts.

A knight walking in the rain? Blocked. A time-lapse of a sunset over mountains? Blocked. Sometimes it felt completely random.

When Sora rejected a prompt, you'd get an error message like this:

```
Error: Job finished with status: failed

{
  "error": {
    "code": "moderation_blocked",
    "message": "Your request was blocked by our moderation system."
  }
}
```

And that was it. No explanation of what triggered the block, no suggestion for how to rephrase your prompt, just a dead end. For creators trying to experiment and iterate, this was infuriating, and many switched to other tools.
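A script calling Sora could do little with a response like that except recognize it. Here is a minimal Python sketch, assuming the error body arrives as the JSON quoted above. How you fetch it (for example from an HTTP response) is left out, and `is_moderation_block` and `describe_failure` are hypothetical helper names, not part of any OpenAI SDK.

```python
import json

# Error body in the shape quoted above (the Sora 1 moderation error).
RAW_ERROR = """
{
  "error": {
    "code": "moderation_blocked",
    "message": "Your request was blocked by our moderation system."
  }
}
"""


def is_moderation_block(raw: str) -> bool:
    """Return True if a JSON error body reports code "moderation_blocked"."""
    try:
        payload = json.loads(raw)
    except json.JSONDecodeError:
        return False  # not JSON, so we cannot tell
    error = payload.get("error") if isinstance(payload, dict) else None
    return isinstance(error, dict) and error.get("code") == "moderation_blocked"


def describe_failure(raw: str) -> str:
    """Summarize the failure; the payload only carries a code and a generic message."""
    if not is_moderation_block(raw):
        return "Failed for a non-moderation reason (or unreadable error body)."
    message = json.loads(raw)["error"].get("message", "")
    # Note what is missing: no trigger, no category, no rephrasing hint.
    return f"Moderation block: {message} (no reason or suggestion provided)"


print(describe_failure(RAW_ERROR))
```

Everything the helper can report comes from two fields, `code` and `message`, and that is exactly the problem: nothing in the payload points at the part of the prompt that tripped the filter.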

The filters were so aggressive that they blocked legitimate creative work along with actually problematic stuff. This became symbolic of a bigger tension in AI development: how do you balance safety with usability?

Other platforms have figured out better approaches. Tools like Argil implement safety systems that block genuinely harmful outputs while keeping the creative flow intact. They give users feedback and let them adjust instead of just throwing up a wall – this is what Sora 1 got so wrong, and what Sora 2 will hopefully fix.

So what would make Sora 2 worth the wait? Based on what content creators have been asking for online, here's what needs to happen:

First and most obvious, Sora 2 needs to fix the Sora 1 moderation issue. The new model should inform users why prompts get blocked and how to adjust them, giving people a chance to learn the boundaries instead of just shutting them down. The key is making moderation feel like a helpful guide instead of an arbitrary censor.
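What that could look like is easy to sketch. The Python below is purely hypothetical: `ModerationResult`, its fields and `feedback_message` are illustrative names, not an OpenAI schema, and the example values are made up. It shows the design idea that the same blocked request can end either in a dead end or in a concrete next step, depending only on how much detail the moderation system returns.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ModerationResult:
    """Hypothetical moderation response that explains itself (not an OpenAI schema)."""
    blocked: bool
    category: Optional[str] = None        # illustrative: which policy area was triggered
    flagged_phrase: Optional[str] = None  # illustrative: which part of the prompt triggered it
    suggestion: Optional[str] = None      # illustrative: how the user might rephrase


def feedback_message(result: ModerationResult) -> str:
    """Build a user-facing message from whatever detail the result carries."""
    if not result.blocked:
        return "Prompt accepted."
    parts = ["Prompt blocked"]
    if result.category:
        parts.append(f"category: {result.category}")
    if result.flagged_phrase:
        parts.append(f'flagged text: "{result.flagged_phrase}"')
    if result.suggestion:
        parts.append(f"try instead: {result.suggestion}")
    return " | ".join(parts)


# A Sora 1 style block carries no detail, so the message is a dead end.
print(feedback_message(ModerationResult(blocked=True)))

# A feedback-oriented block tells the creator what to change (made-up example values).
print(feedback_message(ModerationResult(
    blocked=True,
    category="graphic violence",
    flagged_phrase="blood-soaked battlefield",
    suggestion="describe the battle's aftermath without gore",
)))
```

The first call reproduces the Sora 1 experience ("Prompt blocked" and nothing else). The second gives the creator something to act on without loosening the filter itself.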

Second, character consistency. This is the most requested feature by far: the ability to generate the same character across multiple scenes. If you're trying to tell a story, you need your protagonist to look consistent from shot to shot. Sora 1 couldn't do this reliably, and Sora 2 needs to nail it.

Imagine being able to create episode-like sequences where characters develop and narratives progress – that’s what modern creators actually want to build.

Third, temporal stability. Sora 1 videos would jitter or show odd frame-to-frame inconsistencies, which made them look unprofessional. Sora 2 needs tighter physics simulation and smoother frame consistency.

Finally, creators need API access so they can integrate Sora 2 directly into their editing workflows. Right now, Sora exists as an isolated demo tool that doesn't plug into real production pipelines.

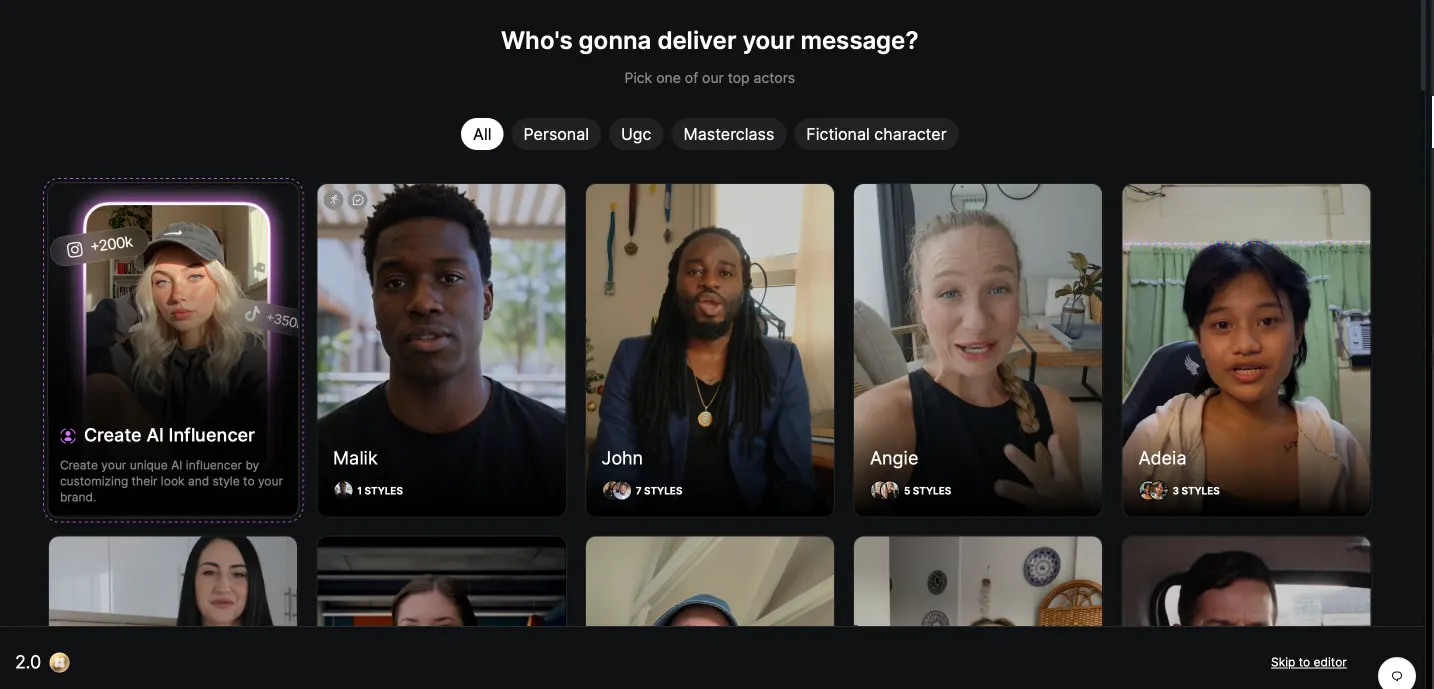

While everyone waits for Sora 2 to solve the Sora 1 moderation issue, platforms like Argil have already figured out what modern creators need.

With Argil, you can generate videos of yourself in under five minutes using AI clones – no camera setup, no moderation blocks killing your momentum and no waiting for beta access. The system gives you instant visual feedback and lets you edit in real time, all in one platform.

Moderation exists in Argil too, but it's designed to be creator-aware. It blocks genuinely harmful content while keeping your creative flow intact. You can adjust speech, tone and motion interactively without waiting for a full re-render.

And unlike Sora 2 (which most users are still waiting on), Argil works across multiple languages and integrates directly with social media platforms. You can create, edit and publish without bouncing between five different tools.

For creators tired of hitting walls with experimental platforms, Argil represents what practical AI video creation looks like in 2025. Sign up today and take advantage of our free trial.