What’s New in AI Video Generation? Key Trends and Tools to Watch in 2026

Read the latest AI video generation news to see what’s changing in the industry, as well as what this means for creators.

AI video generation news moves fast, and the last two years have brought some significant changes.

When AI video avatars first entered the headlines, the focus was firmly on the novelty of being able to create realistic moving images of artificial humans, not just the static pictures we’d seen so far. However, for creators and marketers who are using these tools every day, the conversation has moved on substantially since then.

AI avatar technology has evolved so quickly that the discussion is no longer about whether AI can produce a realistic-enough avatar to replace you in video content, but whether the tools can actually support creators throughout the entire scripting-to-publication workflow and help them produce content more efficiently.

Improvements in lip sync, voice realism, editing automation and script-to-video workflows have made all of this possible. And now, for creators, marketers and small teams, AI video is a day-to-day practicality rather than a novelty.

In 2026, short-form video continues to dominate attention across platforms, and marketers increasingly rely on it for reach and engagement. [Industry reporting](https://blog.hubspot.com/marketing/video-marketing-statistics?) consistently shows that video remains one of the highest-performing formats for engagement and conversion across social platforms.

So what’s the current state of AI video generation, and how is it impacting the creator economy? In this article, we’ll highlight five of the biggest changes to pay attention to in 2026.

One of the most noticeable developments in recent AI video generation news is the improvement in lip-sync accuracy. This is one area where, until recently, avatar tools struggled to impress – speech often felt delayed or disconnected from facial movement, which immediately broke viewer trust. Even small lip-sync mismatches made videos feel artificial and undermined their credibility.

Modern tools now use more advanced phoneme alignment and frame-level synchronization, allowing avatars to match speech patterns far more closely. And this has had a huge impact: when viewers trust what they are seeing, they stay longer, but when they sense something is off, they scroll away quickly.
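To make frame-level synchronization a little more concrete, here is a minimal Python sketch of the general idea, not any vendor’s actual implementation. It assumes a speech aligner has already produced phoneme timings in milliseconds; the `Phoneme` class, the `frame_labels` function and the `"sil"` (silence) label are hypothetical names used only for illustration.

```python
from dataclasses import dataclass


@dataclass
class Phoneme:
    symbol: str    # e.g. "HH"; hypothetical label set
    start_ms: int  # inclusive start time in milliseconds
    end_ms: int    # exclusive end time in milliseconds


def frame_labels(phonemes, fps, n_frames):
    """Label each video frame with the phoneme spoken at that frame's timestamp."""
    labels = []
    for i in range(n_frames):
        t_ms = i * 1000 // fps  # timestamp of frame i, rounded down to whole ms
        current = "sil"  # assumed silence label when no phoneme covers the frame
        for p in phonemes:
            if p.start_ms <= t_ms < p.end_ms:
                current = p.symbol
                break
        labels.append(current)
    return labels


timings = [Phoneme("HH", 0, 100), Phoneme("AY", 100, 400)]
print(frame_labels(timings, fps=10, n_frames=5))
# ['HH', 'AY', 'AY', 'AY', 'sil']
```

In a sketch like this, a renderer would pick a mouth shape for each frame from its label, so the avatar’s lips change at the same moment the audio does.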

Tools like HeyGen and Colossyan have helped push this improvement forward, and the result is that AI spokesperson-style videos are now viable for longer formats, not just short novelty clips. For creators working in industries like education, real estate or consulting, this has opened the door to using AI avatars in client-facing content without sacrificing credibility.

Argil builds directly on this progress by focusing on creator clones rather than generic avatars. Because our avatars are based on a real person’s speech patterns and delivery style, the result feels closer to natural communication than template-based output.

Another recurring theme in AI video generation news is the move away from generic presenters toward expressive, personalized avatars that can be used by influencers and content creators.

Early AI video tools relied heavily on stock faces and scripted delivery, which worked for training videos but struggled in creator-led environments where personality matters.

Creators increasingly want avatars that reflect their identity, tone, and audience expectations. Advances in facial animation and expression modeling now allow avatars to show subtle emotional variation, which makes videos feel more human. This has become particularly important for global creators producing multilingual content, where tone carries meaning beyond words.

Industry coverage has pointed out that representation and authenticity are becoming major drivers in avatar adoption, especially as AI tools expand globally. Reports like MIT Technology Review’s analysis of generative AI media trends highlight how users are moving toward systems that allow personalization rather than standardized outputs.

Argil fits into this trend by allowing creators to train avatars based on themselves rather than choosing from a library. The result is not just visual similarity but continuity of voice and presence across videos, which helps audiences recognize and trust the creator over time.

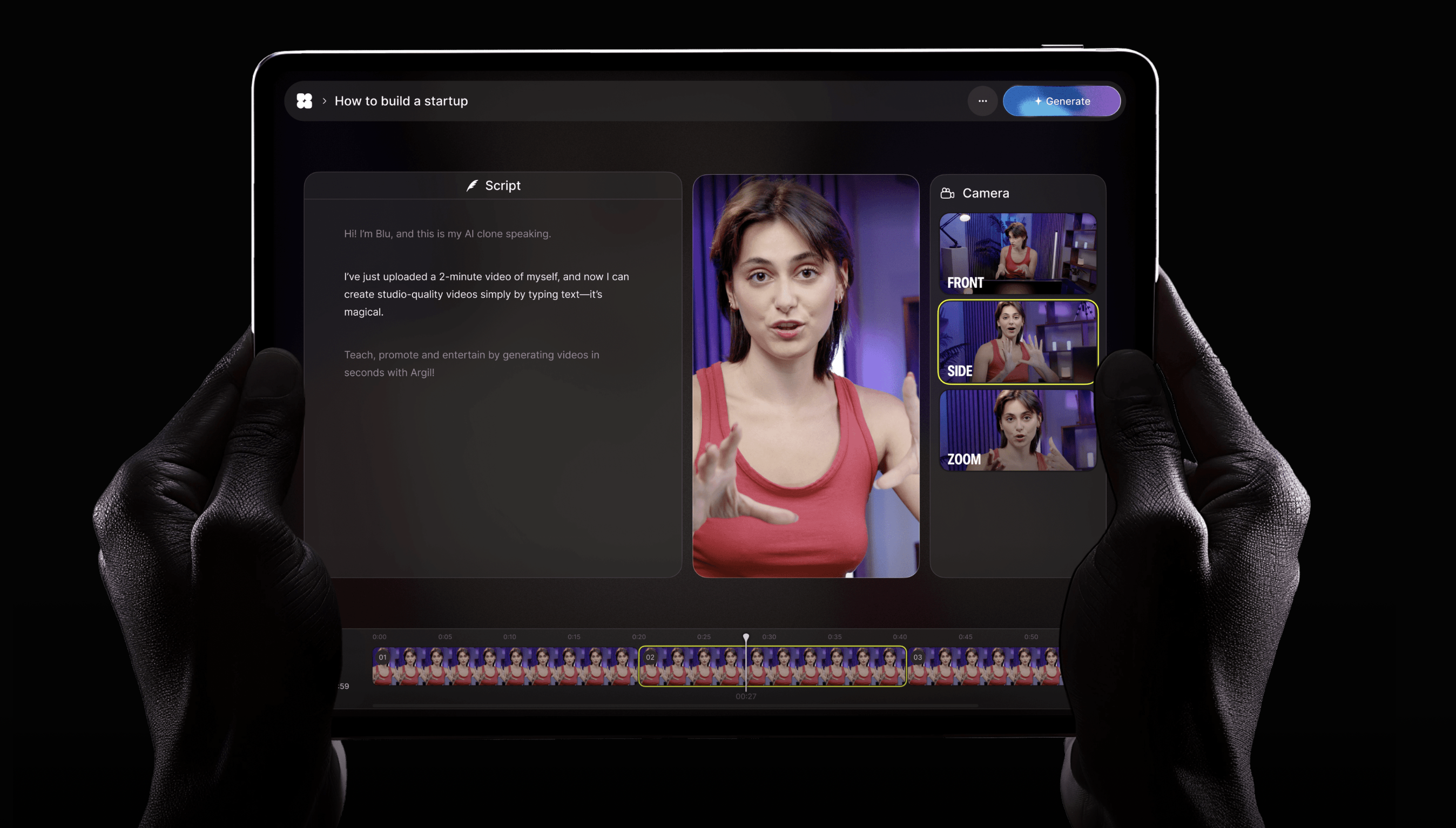

Perhaps the biggest structural change in AI video generation news is the rise of end-to-end workflows. In the past, creators often needed separate tools for scripting, voice generation, editing, captions and publishing – each step introduced friction, and many projects stalled before completion.

The current generation of tools aims to remove those gaps. Text-to-video workflows allow creators to move directly from script input to a finished video. Runway and similar platforms have pushed visual generation forward, while tools like Synthesia and Argil have focused on spoken avatar workflows.

The difference in 2026 is that creators expect these systems to produce content ready for distribution, not just raw output. Automatic captions, transitions and formatting for vertical platforms are becoming standard rather than premium features.

This change mirrors what happened in blogging years earlier. Once drag-and-drop platforms like WordPress made publishing frictionless, content volume increased dramatically. AI video is moving in the same direction – the easier it becomes to publish consistently, the more valuable the workflow becomes compared to individual features.

Voice technology has also matured quickly. Early text-to-speech systems sounded technically accurate but emotionally flat. Recent advances focus on prosody, pacing and emotional variation, allowing voices to adapt to tone and context.

This has been widely discussed across AI video generation news because voice plays a major role in viewer retention. A realistic voice helps audiences stay engaged even when visuals remain simple.

For creators, the practical advantage is speed. Scripts can be adjusted and regenerated instantly without re-recording audio. Multilingual output becomes possible while maintaining the same voice identity across markets.

Argil uses this capability as part of a broader workflow. Instead of generating isolated voice files, Argil integrates voice cloning directly into video creation, allowing creators to iterate quickly while keeping delivery consistent.

Editing has traditionally been the biggest bottleneck in video production. Recording a video might have taken minutes, but editing could take hours – and that’s with a single take. This problem has historically limited output for many creators and prevented them from achieving their potential or monetizing their work.

Recent AI video generation news reflects a major change here. Automated editing, contextual B-roll insertion and templated formats are becoming standard features rather than advanced options.

Tools like Pictory and Runway have contributed to this change, but Argil pushes it further by integrating editing directly into the script-to-video flow. Captions, transitions and pacing adjustments happen automatically, allowing creators to focus on ideas rather than post-production.

This is especially important for industries where expertise matters more than visual polish. Real estate agents, lawyers and educators benefit from being present and consistent rather than perfectly produced.

For creators, agencies and small businesses, the takeaway from current AI video generation news is that the tools that win will be the ones that make publishing easier, not just visually impressive.

AI video stops being a novelty once it works reliably and becomes part of everyday workflows, which is exactly where the industry is heading.

This is why platforms like Argil are gaining so much attention. They represent what AI video looks like when it moves beyond demos and becomes part of real-life creative work.

If you want to see how it works, sign up today and enjoy your first five days free.