What Is Metahuman Creator? Everything You Need to Know in 2026

Metahuman Creator is causing a splash in the video game world – but what is it, how is it used and what’s possible with these AI avatars?

If you’ve spent any time online researching digital avatars in the gaming niche, you’ve almost certainly come across Metahuman Creator. And if you’re wondering what it actually does, who uses it and how it compares to other AI avatar tools, you’re in the right place.

In this guide, we’ll walk you through everything you need to know about Epic Games’ Metahuman Creator in 2026 — what it does, where it falls short and how it fits into the wider world of digital avatars.

We’ll also introduce you to Argil, our creator-first AI video production tool that provides a simpler alternative for influencers, entrepreneurs and content creators.

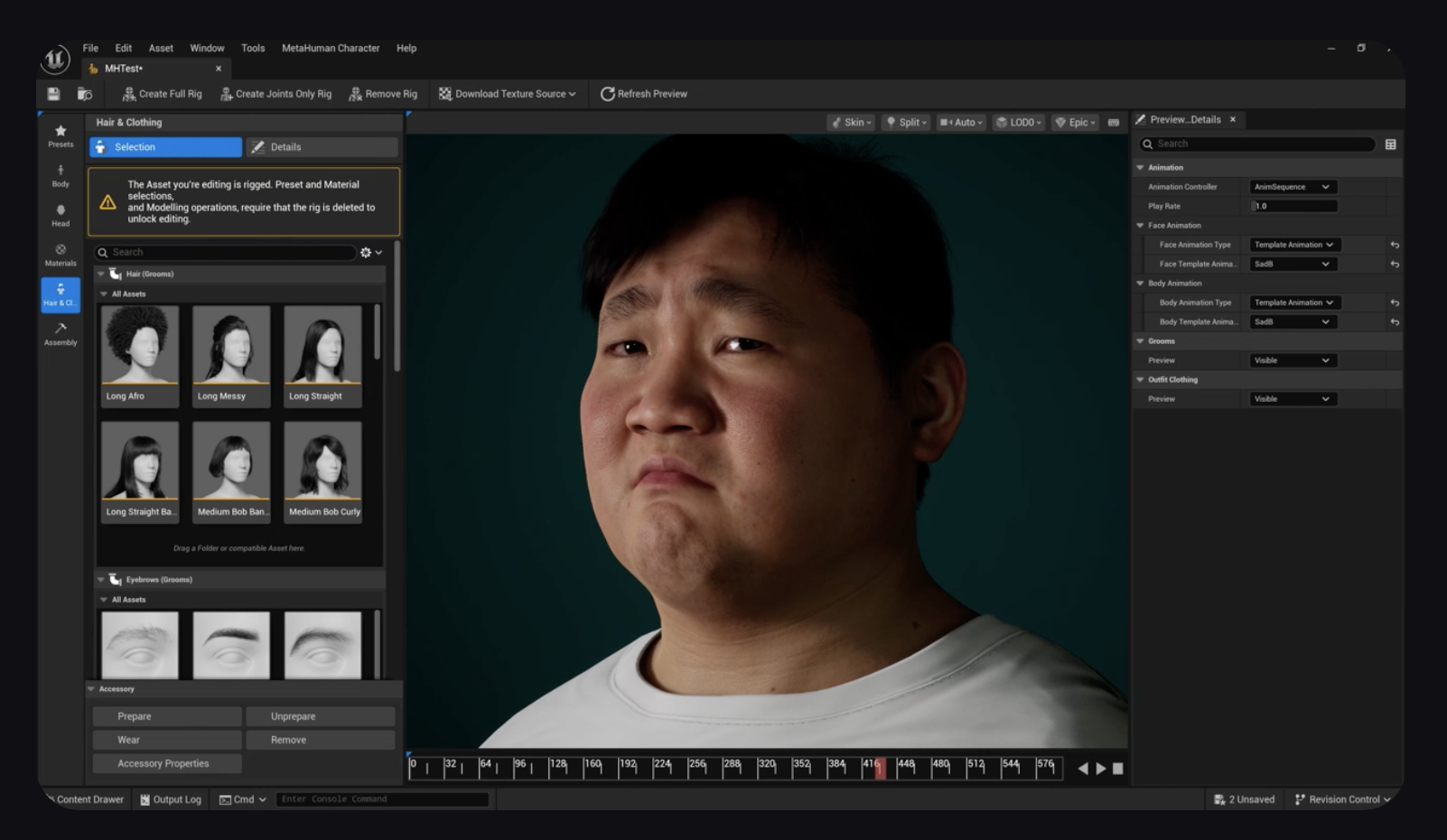

Metahuman Creator is a cloud-based character creation tool built by Epic Games, designed to produce incredibly realistic digital avatars. It sits inside the Unreal Engine ecosystem and has become the industry standard for game developers, virtual production teams and digital artists who need film-grade character quality.

When it launched in 2021, it felt completely inaccessible – like the kind of technology only large studios could use. But in 2025, Metahuman is being used by thousands of creators to build avatars for games, films, advertising and immersive experiences.

Unlike basic 3D models or VTuber-style characters, Metahumans aren’t static. They come fully rigged with facial blend shapes, body morphs and animation-ready skeletons. You can control them with facial motion capture, body mocap suits or Unreal Engine’s internal animation tools.

What’s more, everything is procedurally generated so creators can tweak details like skin and hair texture, pores, eye reflections, scars, facial asymmetry and micro-expressions – all without starting from scratch.

And because it’s tightly linked to Unreal Engine, it supports real-time rendering, mocap integration and advanced lighting systems like Lumen.

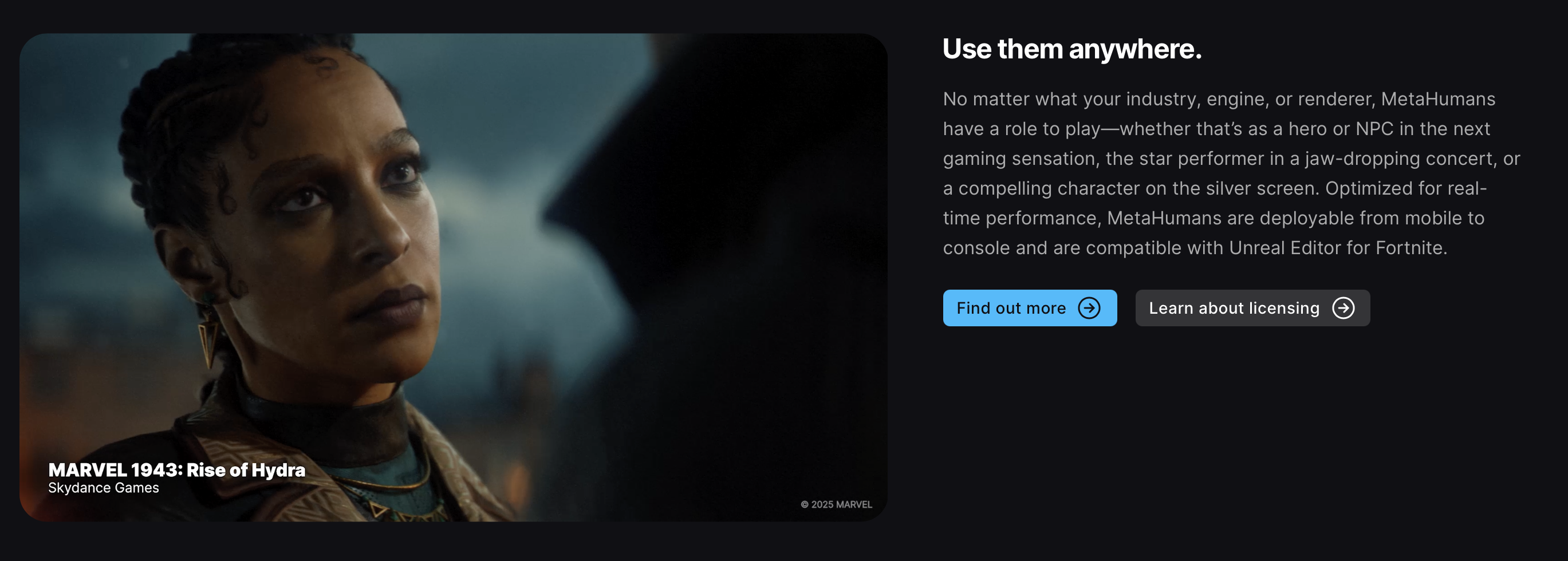

One of the biggest adopters of Metahuman Creator is the gaming industry. Developers use Metahumans for both main and side characters in AAA titles and indie games, largely because the tool simplifies character development that would otherwise take weeks of modelling.

Real-time facial animation and body rigging help studios create NPCs that feel alive, expressive, dynamic and emotionally believable.

In 2025, tools like Live Link Face, Faceware and Cubic Motion allow creators to animate Metahumans from an iPhone instantly, making the workflow much more efficient.

Several games and Unreal Editor for Fortnite (UEFN) experiences already use Metahuman Creator to prototype characters quickly. Paired with UE5 features like Nanite and Lumen, these characters blend seamlessly into next-gen game environments.

VTubing (the practice of online streaming with a virtual character instead of your own face) is no longer limited to anime-style avatars. A growing cohort of creators now want photorealistic or semi-realistic virtual identities, and Metahuman Creator has become a popular tool in this area.

VTubers often pair Metahumans with facial capture tools like ARKit or Faceware to create livestream-ready avatars that mirror real-time expressions. This opens the door for creators in tech, education, beauty and professional niches who want a more realistic presence without appearing on camera themselves.

Agencies are now building entire rosters of virtual influencers using Metahuman Creator, prototyping characters across ages, ethnicities and styles. Exporting to Unity, OBS or TikTok filters is possible (and increasingly common), although it still requires complex pipeline work.

Metahumans aren’t just used for entertainment. Studios like Weta Digital and teams behind Netflix productions use Metahuman Creator for digital doubles, previsualization and background characters. In advertising, realistic avatars help brands create spokesperson-style content without flying actors to sets or reshooting takes.

Corporate training departments also use Metahumans for simulations, internal explainers and digital walkthroughs. Architecture and automotive companies rely on them for product demos, safety simulations, and immersive customer experiences.

Metahuman Creator has effectively become a versatile tool that spans creative, corporate and technical industries – but is it all too good to be true?

For all its strengths, Metahuman Creator has significant limitations, especially for everyday digital creators or marketers who simply want to produce videos for social media.

Here are some of the biggest friction points:

To get the most out of Metahuman Creator, you need a solid understanding of Unreal Engine, rigging, retargeting, real-time pipelines, sequencing and animation tools. For complete beginners, this learning curve is steep.

Metahuman Creator itself is fairly intuitive, but the tech stack you’ll need around it is complex.

You can animate a Metahuman manually, but to achieve lifelike facial expressions, you’ll need to use motion capture tools like Rokoko, ARKit or Cubic Motion. These tools can be expensive and time-consuming.

Even with the MetaHuman Animator update, capturing full-body performance requires significant hardware and setup, which isn’t available to all creators – especially those who are strapped for time and resources.

Even experienced teams find that generating polished Metahuman videos is a long process. Shot planning, mocap recording, scene setup, lighting and rendering all take time, so creating ten or twenty videos a month (the typical output for brands or content creators) is extremely difficult without a studio environment.

Metahuman Creator doesn’t include built-in script editors, captioning tools, automated voice generation or one-click rendering. You have to manually assemble your videos inside Unreal Engine or another program. For creators who need speed, this is the biggest bottleneck.

While Metahuman Creator excels at realism and high-end production, it simply isn’t built for the everyday video creator. This is where Argil comes in.

Argil provides you with a realistic digital human to star in your videos, without all the complexity.

With Argil, you can turn a selfie video into a lifelike AI avatar with just your browser. No Unreal Engine, no mocap rig and no animation tools – you just upload your selfie video, and Argil generates your avatar automatically. From there, you can turn scripts, blogs, newsletters or PDFs into fully edited videos.

Everything happens in a single interface, and a finished video can be produced in under ten minutes.

If you need to produce fast, scalable avatar-led content without a production team, Argil solves the exact problem that Metahuman Creator can’t.

While Metahuman Creator is built for artists and studios, Argil is built for creators, marketers and influencers who want to produce content at scale.

Realism still matters, but so does usability. As AI tools grow more advanced, avatar quality will be only one deciding factor between tools – how quick, easy and intuitive a tool is for the average creator will matter just as much. And that’s exactly where Argil shines.

Sign up today to create your digital avatar and start generating platform-ready videos in minutes. Your first 5 days are free!